Project information

- Client: CHIST-ERA

- Project URL: https://www.chistera.eu/projects/soon

- Publication: https://ieeexplore.ieee.org/document/9569644

- Categories: Reinforcement Learning, Multi-Agents, Hyperparameter Optimization, Curriculum Learning

- Main technologies: Python, Gym, SimPy, Stable Baselines, Ray RLlib, Ray Tune (originally Unity ML-Agents)

Summary

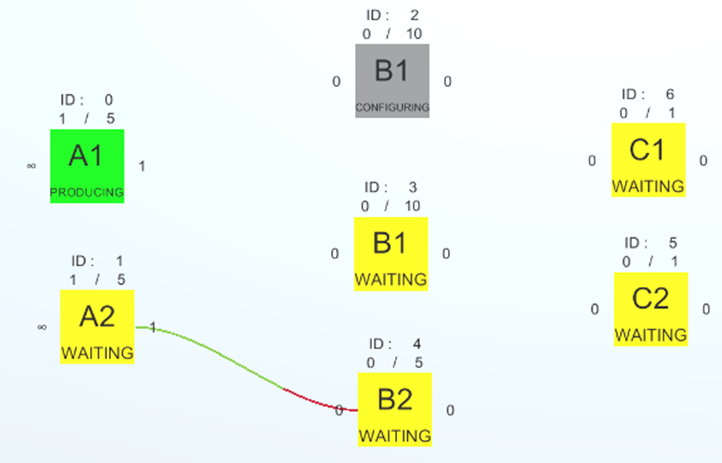

SOON-RL is a component of the SOON (social network of machines) project that uses multi-agent reinforcement learning to optimize production in a workshop. The goal was to produce a given order efficiently by training several agents to carry out the necessary tasks. The workshop consists of machines specialized in different tasks, and each component is produced through a sequence of production steps. The machines had to coordinate to fulfill the order as quickly as possible while also reacting to machine failures.

To achieve this, a simulation environment was developed in Python using Gym and SimPy. Training initially relied on Stable Baselines, but the project later moved to Ray RLlib to support multi-agent training. Hyperparameters and reward functions were tuned with Ray Tune. By the end of the project, the machines had learned to complete the order in the minimum number of steps; this minimum was computed by hand to confirm that the learned behavior achieved the best possible time.
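The minimum number of steps mentioned above can be computed by hand for simple cases. As a sketch, assume a linear chain with one dedicated machine per stage, a fixed number of steps per stage, unlimited storage between stages, and no breakdowns or reconfigurations (all assumptions, not the project's actual simulation). Under these conditions the classic flow-shop recurrence gives the completion time, and for identical parts it reduces to a closed form: the sum of the stage times plus (n - 1) times the slowest stage. The function names are hypothetical.

```python
def pipeline_makespan(stage_times, n_parts):
    """Simulate identical parts flowing through a linear chain of stages.

    Assumes one machine per stage, unlimited storage between stages, and no
    breakdowns or reconfigurations. A part starts stage i once it has left
    stage i - 1 and the stage-i machine has finished the previous part.
    """
    finish = [0] * len(stage_times)  # when each stage last finished a part
    for _ in range(n_parts):
        t = 0  # time the current part leaves the previous stage
        for i, duration in enumerate(stage_times):
            t = max(t, finish[i]) + duration
            finish[i] = t
    return finish[-1] if n_parts > 0 else 0


def min_steps_closed_form(stage_times, n_parts):
    """Same result in closed form: fill the chain once, then the slowest stage paces the rest."""
    if n_parts == 0:
        return 0
    return sum(stage_times) + (n_parts - 1) * max(stage_times)


print(pipeline_makespan([1, 3, 1], 2), min_steps_closed_form([1, 3, 1], 2))  # 8 8
```

With stage times of 1, 3 and 1 steps, the first part is finished after 5 steps and the 3-step bottleneck then paces the second part, giving 8 steps in total.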

Details

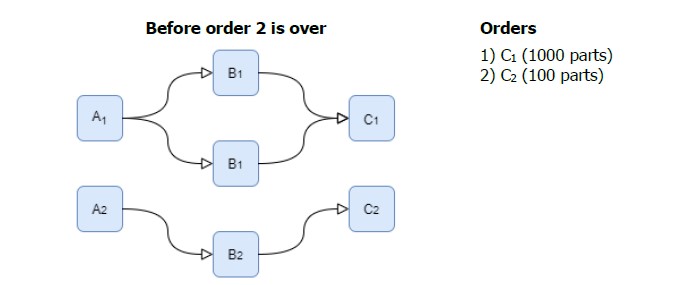

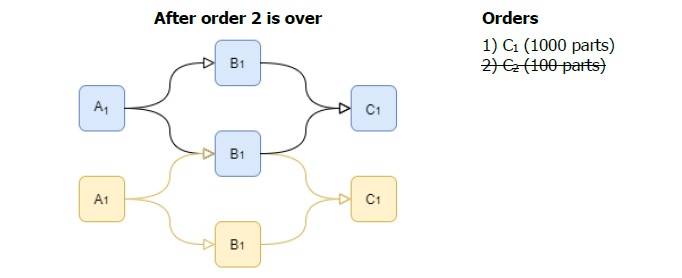

The primary objective of this project was to optimize production in a workshop using Reinforcement Learning. The workshop consists of several machines that produce parts in sequence to fulfill one or more orders. For example, a finished part may require the production steps A1 → B1 → C1. The machines had to work together to complete orders in scenarios of varying complexity.
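A recipe like A1 → B1 → C1 can be represented as an ordered list of part types. The sketch below expands an order into the number of units each stage must produce, assuming each unit of a part consumes exactly one unit of the previous part in its chain. The `RECIPES` table, the second recipe (A1 → B2 → C2) and the function name are hypothetical; they only illustrate how shared intermediate parts such as A1 accumulate across orders.

```python
from collections import Counter

# Hypothetical recipes: each finished part maps to its ordered production chain.
RECIPES = {
    "C1": ["A1", "B1", "C1"],
    "C2": ["A1", "B2", "C2"],
}


def stage_workload(order, recipes):
    """Count how many units of each part type an order requires.

    `order` maps a finished part type to the requested quantity. Assumption:
    one unit of a part consumes exactly one unit of the previous part type.
    """
    workload = Counter()
    for finished, quantity in order.items():
        for part_type in recipes[finished]:
            workload[part_type] += quantity
    return workload


print(dict(stage_workload({"C1": 2, "C2": 1}, RECIPES)))
```

For an order of two C1 and one C2, the A1 stage must produce 3 units, while each of the other stages produces only what its own chain needs.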

Each machine could perform one of four actions: Wait, Reconfigure (e.g., switch the produced part type from A1 to A2), Get Pieces from storage, or Produce. Each machine observed the recipes for the orders, the number of parts available in storage, the current order to fulfill, the state of every machine, and the completion progress of the parts.
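The four actions can be modelled as a per-machine state transition. The sketch below is a simplified, hypothetical model, not the project's environment: the `Machine` fields, the one-input-piece rule, and the treatment of the first stage as drawing unlimited raw material are all assumptions. Fetching a piece consumes one unit of the preceding part type from storage, producing puts one unit of the configured type back into storage, and invalid actions are rejected so that an environment could penalize them. The observation vector is left out for brevity.

```python
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Action(IntEnum):
    WAIT = 0
    RECONFIGURE = 1
    GET_PIECES = 2
    PRODUCE = 3


@dataclass
class Machine:
    part_type: str                # part type the machine is currently configured for
    holding_input: bool = False   # True once the input piece has been fetched
    broken: bool = False


def input_type(part_type, recipe):
    """Part consumed to produce `part_type`, or None for the first stage (raw material)."""
    i = recipe.index(part_type)
    return recipe[i - 1] if i > 0 else None


def apply_action(machine, action, storage, recipe, target=None):
    """Apply one action in place; return False if it is invalid in the current state."""
    if machine.broken:
        return action == Action.WAIT           # a broken machine can only wait
    if action == Action.WAIT:
        return True
    if action == Action.RECONFIGURE:
        if machine.holding_input or target not in recipe:
            return False
        machine.part_type = target
        return True
    if action == Action.GET_PIECES:
        if machine.holding_input:
            return False
        needed = input_type(machine.part_type, recipe)
        if needed is not None:
            if storage[needed] == 0:
                return False                   # input part not available yet
            storage[needed] -= 1               # take one input piece from storage
        machine.holding_input = True
        return True
    if action == Action.PRODUCE:
        if not machine.holding_input:
            return False
        storage[machine.part_type] += 1        # finished piece goes back to storage
        machine.holding_input = False
        return True
    return False


# Usage: two machines cooperating on the chain A1 -> B1 -> C1.
recipe = ["A1", "B1", "C1"]
storage = Counter()
a, b = Machine("A1"), Machine("B1")
print(apply_action(b, Action.GET_PIECES, storage, recipe))  # False: no A1 in storage yet
print(apply_action(a, Action.GET_PIECES, storage, recipe))  # True: raw material
print(apply_action(a, Action.PRODUCE, storage, recipe))     # True: one A1 in storage
print(apply_action(b, Action.GET_PIECES, storage, recipe))  # True: consumes that A1
```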

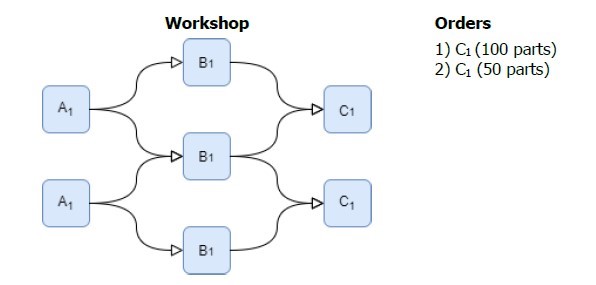

The first scenario involved producing a single type of part to fulfill an order (A1 → B1 → C1). When a new order arrived, it was either for the part already being produced (C1) or for a different part type, in which case some machines had to be reconfigured.
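When a new order arrives, the machines to reconfigure can be computed with a simple greedy rule. This is a hand-written heuristic for illustration, not the learned policy: machines already configured for a type that the new recipe needs keep their configuration, and the remaining machines are assigned to the still-uncovered stages in recipe order. The function name and the recipe A1 → B2 → C2 used below are hypothetical.

```python
def plan_reconfiguration(current_types, new_recipe):
    """Map machine index -> new part type so that every stage of `new_recipe` is covered.

    Greedy heuristic (assumption, not the learned policy): machines already
    configured for a needed type keep it; the others are reconfigured to the
    stages that are still uncovered, in recipe order.
    """
    uncovered = list(new_recipe)
    keep = set()
    for i, part_type in enumerate(current_types):
        if part_type in uncovered:
            uncovered.remove(part_type)  # this machine already covers the stage
            keep.add(i)
    plan = {}
    for i in range(len(current_types)):
        if i not in keep and uncovered:
            plan[i] = uncovered.pop(0)
    return plan


print(plan_reconfiguration(["A1", "B1", "C1"], ["A1", "B1", "C1"]))  # {}
print(plan_reconfiguration(["A1", "B1", "C1"], ["A1", "B2", "C2"]))  # {1: 'B2', 2: 'C2'}
```

An order for the part already in production needs no reconfiguration, while switching to the A1 → B2 → C2 chain keeps machine 0 on A1 and reconfigures the other two. If there are fewer free machines than uncovered stages, some stages stay uncovered in the returned plan.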

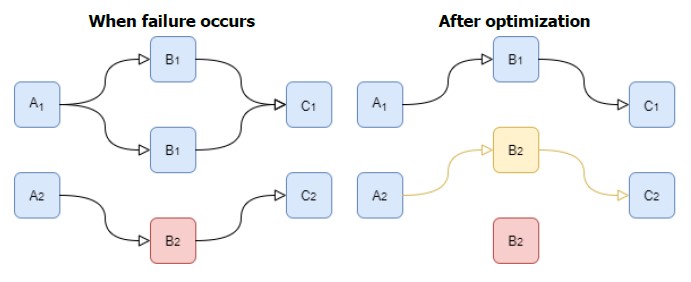

In the second scenario, the machines executed an order until a breakdown occurred. Reconfigurations were necessary to compensate for the defective machine.
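After a breakdown, a stage of the chain may lose its only working machine. The heuristic below is an assumption for illustration, not the project's method: it looks for a working machine whose own stage is still covered by another working machine, or which is configured for a type the recipe does not need, and reconfigures it to the missing stage. The function name is hypothetical.

```python
from collections import Counter


def repair_after_breakdown(types, broken, recipe):
    """Return {machine index: new part type} restoring coverage of the recipe's stages.

    `types` lists each machine's configured part type and `broken` holds the
    indices of defective machines. Only redundant working machines are used
    as donors, so no currently covered stage is left without a machine.
    """
    working = [i for i in range(len(types)) if i not in broken]
    coverage = Counter(types[i] for i in working)
    plan = {}
    for stage in recipe:
        if coverage[stage] > 0:
            continue  # stage still has a working machine
        for i in working:
            current = types[i]
            redundant = current not in recipe or coverage[current] > 1
            if i not in plan and redundant:
                coverage[current] -= 1
                coverage[stage] += 1
                plan[i] = stage
                break
    return plan


print(repair_after_breakdown(["A1", "A1", "B1", "C1"], {2}, ["A1", "B1", "C1"]))  # {0: 'B1'}
```

With three machines and the B1 machine broken, no working machine is redundant and the returned plan is empty. A static rule like this cannot express alternating one machine between two stages over time, which requires planning across steps.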

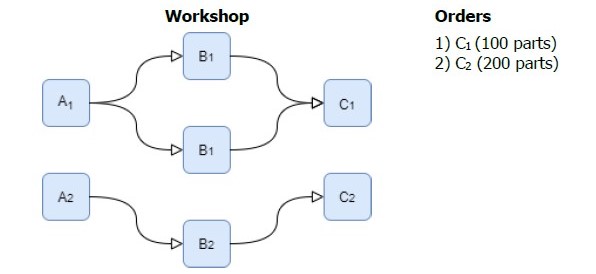

The third scenario involved producing two orders simultaneously, the first of which took a long time to complete. Once the second order was finished, machines were reconfigured to help complete the first order faster.

Reinforcement Learning was used to optimize the machines' actions in each scenario: the machines were trained to choose actions that fulfill orders efficiently despite possible breakdowns and required reconfigurations. The project was implemented in Python with Gym and Ray RLlib, and hyperparameters and rewards were optimized with Ray Tune.
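One common way to make "fulfill the order as fast as possible" explicit in the reward is a shared per-step penalty: if every agent receives -1 per step until the order is complete, an episode's return is minus its length, so maximizing return minimizes the number of steps. The sketch below is hypothetical (the function name and penalty values are placeholders of the kind one might tune with Ray Tune, not the project's reward). It returns per-agent dictionaries with an `"__all__"` termination key, following the dict-per-agent convention of RLlib's `MultiAgentEnv`, without importing Ray.

```python
def shared_rewards(valid, order_done, step_penalty=-1.0, invalid_penalty=-0.5):
    """Per-agent rewards and termination flags for one environment step.

    `valid` maps an agent ID to whether that agent's action was valid.
    Every agent pays the shared step penalty, so shorter episodes yield a
    higher return; invalid actions add an individual penalty.
    """
    rewards = {
        agent_id: step_penalty + (0.0 if ok else invalid_penalty)
        for agent_id, ok in valid.items()
    }
    terminateds = {agent_id: order_done for agent_id in valid}
    terminateds["__all__"] = order_done  # RLlib-style flag ending the episode for all agents
    return rewards, terminateds


rewards, terminateds = shared_rewards({"m0": True, "m1": False}, order_done=False)
print(rewards)      # {'m0': -1.0, 'm1': -1.5}
print(terminateds)  # {'m0': False, 'm1': False, '__all__': False}
```

Sharing the step penalty across all agents rewards cooperation: no machine benefits from finishing its own work early if the order as a whole is still incomplete.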

Fun fact

This project was the subject of my Bachelor's thesis and was originally implemented in C# in Unity with Unity ML-Agents. A dedicated interface was developed for the project, allowing workshop configurations to be created directly in Unity. When I joined HE-Arc as a research assistant, the project was still in progress, and I was assigned to continue its development. There, I made significant improvements to the project while continuing to advance its goals.